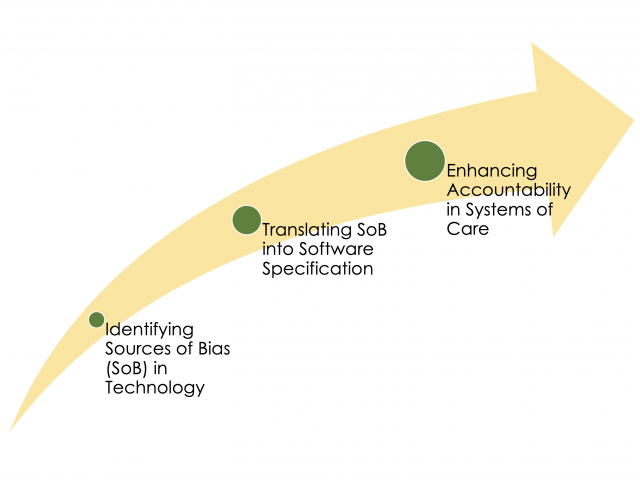

Social services, traditionally, have been organized around their missions, such as education or safety or health. A newer approach, called "wrap-around services" or "systems of care," organizes services around individuals and their specific context and needs. These systems face many challenges when applied in real-world settings. Application processes often focus more on the potential of technologies and less on the realities, histories, and needs of communities. The proposed research addresses this gap by evaluating the implementation of a system of care in a real-world setting. The research involves studying how civic participation may be better supported and bias reduced in the development and integration of systems of care for communities. A key outcome of this proposal is to understand the influence of processes inspired by justice, equity, diversity, and inclusion to intentionally check bias and reduce disparities in the design and application of public service provisioning software systems. The goal of the proposed work is to advance accountable software systems through developing generalizable and localizable practices for exploring how to identify specific and systemic sources of bias, improve public service provisioning outcomes, and minimize disparities from biased program outcomes.

The proposed work builds from existing theory and the project team’s experience in designing and executing software systems that support services to the public. The intent is to identify and map the different types of threats to accountability that can be anticipated within the socio-computational ecosystem of care services. The team is studying dynamics of how bias might propagate across the system through regulations, social contexts, data, and lack of representation in the software development process, among other factors. Once such dynamics are mapped, the team will explore how to attend to bias through a series of interventions planned and conducted in collaboration with community members. These will include mapping the system collaboratively, holding knowledge convergence workshops, conducting algorithmic impact assessments, and using computational analytic techniques that can augment governance opportunities in the design and deployment of these systems of care. An outcome measure to be tracked through this process is the effectiveness of a system at responding to individual-level threats and creating bias immunity at the systems level through shared stewardship and accountability. The types of products expected from this research are advances in theory (articulation of novel threats, new understandings of dynamics and treatments), specific products related to an algorithmic accountability policy toolkit, and guidelines for practice customizable to local use cases through generalizable convergence workshops.

*The PIs thank the National Science Foundation (NSF) DASS program for funding this project (#2217706).

*This project was terminated on 4/25/2025 due to changes in NSF and administration priorities.